2025-09-03

Lensless Imaging: The Camera's Coding Magic

Author:

In 1839, the Frenchman Daguerre presented the world's first practical photograph: a street scene in Paris. His daguerreotype camera, which opened an era in which humans recorded the world with machines, needed exposures of several minutes. Over the nearly two centuries since, cameras have changed radically: from film cameras that required darkroom development to digital cameras with instant review; from black-and-white still images to 4K and even 8K high-definition video; from single-lens reflex cameras that demanded professional skill to smartphone cameras that anyone can use.

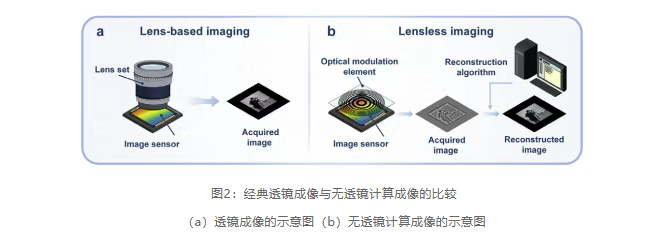

However, from Daguerre's 1839 camera to today's newest digital cameras, every camera shares one core component: the lens. That seems obvious; how could a camera work without a lens? Light emitted or reflected by an object diverges from every point on it. To form a sharp image on the sensor, a lens must bring these diverging rays back together so that each point on the object maps to exactly one point on the sensor. Without a lens, light from every point would spread across the whole sensor, and the result would be nothing but a blur.

Yet scientists have achieved what seemed impossible: they have found a way to form images without a lens. This is what lensless computational imaging does. It replaces the centuries-old lens with optical coding and algorithms, starting another revolution in imaging technology.

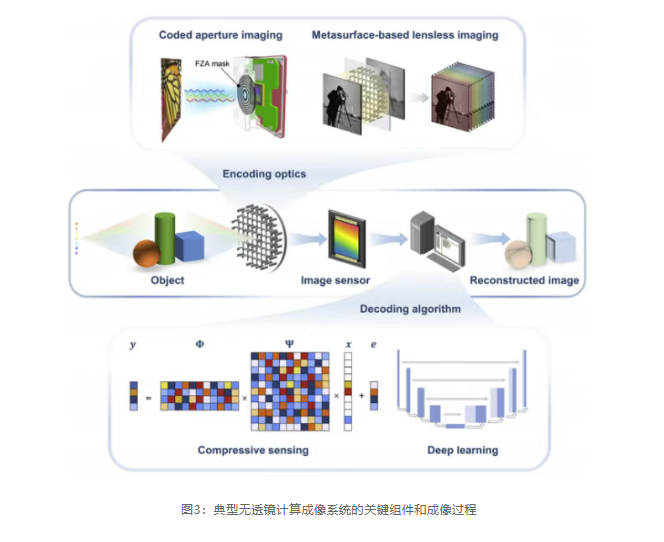

To obtain a complete photograph, one must know the position and brightness of every point on the object, that is, where the light comes from and how strong it is. In traditional imaging, the lens's focusing creates a one-to-one mapping between light from each position on the object and a corresponding position on the sensor; the lens can focus light because its curved surfaces change the light's propagation path. The coding elements used in lensless cameras cannot focus light this way, so they rely on computational imaging, which combines optical coding with algorithmic decoding. After passing through the coding element, light from each point on the object produces a unique, distinguishable response pattern on the sensor. These responses overlap and form an apparently chaotic image, but because the response of every position is known in advance, an algorithm can work backward, separate the contribution of each point, and reconstruct a clear image.

Therefore, the coding element does not physically focus light; imaging is completed in the computational domain through a coding-recording-decoding process. The optical system encodes the information and the computational system decodes it; working together, they achieve lensless imaging.

So, what is coding, how is recording done, and how is decoding performed?

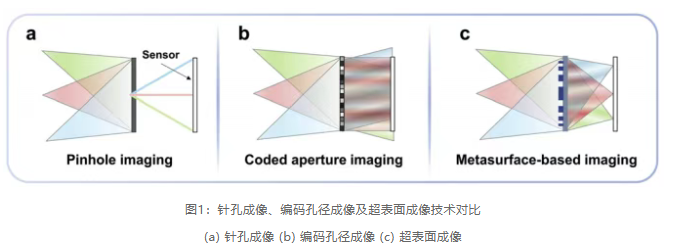

Without a lens, light falls directly on the sensor, and light from each point on the object spreads over the entire sensor, causing blur. A coding element (such as a mask with a specific pattern, a scattering medium, or a nanostructured metasurface) modulates the incident light in a known, specific way, turning point-to-point mapping into point-to-pattern mapping. In other words, in traditional imaging each point on the object corresponds to one point on the sensor; in coded imaging each point on the object produces an extended pattern on the sensor, such as diffraction rings or irregular speckles, and points at different positions produce different patterns.

The sensor records not a reduced image of the object but the superposition of all point patterns. Each pattern is a unique code carrying positional information. By pre-calibrating the pattern corresponding to each position, a computer can mathematically separate each position's contribution from the mixed signal and ultimately restore a clear image.
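The point-to-pattern idea can be made concrete with a toy model. The Python/NumPy sketch below assumes tiny hypothetical sizes (4 scene points, 16 sensor pixels) and uses random arrays as stand-ins for real calibrated patterns. It only shows that "a superposition of brightness-weighted patterns" is the same thing as a matrix-vector product, and checks that the patterns are distinguishable from one another.

```python
import numpy as np

rng = np.random.default_rng(0)
n_points, n_pixels = 4, 16  # hypothetical toy sizes

# Calibration stand-in: column j is the pattern that a unit-brightness point
# at position j leaves on the sensor (random, not real diffraction/speckle data).
patterns = rng.random((n_pixels, n_points))

scene = np.array([0.0, 2.0, 0.5, 1.0])  # brightness of each scene point

# The sensor adds up every point's pattern, weighted by that point's brightness.
recorded = np.zeros(n_pixels)
for j in range(n_points):
    recorded += scene[j] * patterns[:, j]

# The same superposition written as a matrix-vector product.
print(np.allclose(recorded, patterns @ scene))

# "Distinguishable" patterns: no pattern is a mixture of the others
# (full column rank), which is what makes separating contributions possible.
print(np.linalg.matrix_rank(patterns) == n_points)
```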

The DiffuserCam system developed at the University of California, Berkeley, uses an ordinary diffuser as the coding element, placed a few millimeters in front of the sensor. Light from a point source passing through the diffuser forms a complex but deterministic pattern of bright curves (a caustic pattern). Point sources at different positions produce different patterns: moving the source laterally shifts the pattern, while moving it axially changes the pattern's size. The researchers obtained the system's point spread function (PSF), the pattern produced by a point source at each spatial position, through calibration in advance. During imaging, the scene is encoded as a superposition of countless such patterns, and compressed sensing algorithms can reconstruct clear two-dimensional images, or even three-dimensional information, from this seemingly chaotic measurement.
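Because lateral movement only shifts the pattern, a two-dimensional DiffuserCam-style measurement can be modeled as a convolution of the scene with a single calibrated PSF. The NumPy sketch below assumes a random array as a stand-in PSF and uses circular convolution via the FFT as a simplification: a real sensor records a cropped, non-circular convolution, and the depth-dependent change in pattern size is ignored here. The hypothetical helper `sensor_image` checks that moving a point source shifts its pattern and that several sources simply add up.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 32  # hypothetical sensor size (N x N pixels)

# Stand-in for a calibrated PSF: a random non-negative pattern.
psf = rng.random((N, N))

def sensor_image(scene, psf):
    """Shift-invariant forward model: circular 2-D convolution via the FFT."""
    return np.real(np.fft.ifft2(np.fft.fft2(scene) * np.fft.fft2(psf)))

# A single point source at pixel (5, 7) ...
point = np.zeros((N, N))
point[5, 7] = 1.0
# ... and the same source moved sideways by (3, 4) pixels.
moved = np.zeros((N, N))
moved[8, 11] = 1.0

img_point = sensor_image(point, psf)
img_moved = sensor_image(moved, psf)

# Lateral movement only translates the recorded pattern.
print(np.allclose(img_moved, np.roll(img_point, shift=(3, 4), axis=(0, 1))))

# A scene of several points records the weighted sum of their shifted patterns.
scene = point + 0.5 * moved
print(np.allclose(sensor_image(scene, psf), img_point + 0.5 * img_moved))
```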

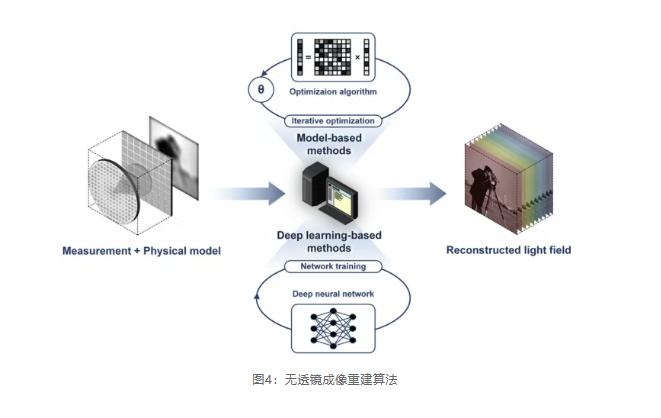

Having covered coding, the next question is how the computer restores a clear image from these chaotic patterns. Mathematically, the process can be written as a linear system, b = Φa + e, where b is the chaotic image measured by the sensor, a is the original scene to be recovered, Φ is the system matrix whose columns are the patterns produced by each position, and e is noise. Decoding means solving for a, given b and Φ.
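A minimal numerical sketch of b = Φa + e, assuming a random stand-in Φ with more sensor pixels than scene points (200 versus 50, hypothetical sizes) and a small noise level. In this well-posed case, where there are more equations than unknowns, ordinary least squares (`numpy.linalg.lstsq`) already recovers the scene despite the noise.

```python
import numpy as np

rng = np.random.default_rng(2)
m, n = 200, 50                               # hypothetical: 200 pixels, 50 scene points
Phi = rng.normal(size=(m, n)) / np.sqrt(m)   # stand-in system matrix
a_true = rng.random(n)                       # the unknown scene
e = 0.001 * rng.normal(size=m)               # small sensor noise
b = Phi @ a_true + e                         # what the sensor records

# Decoding: find the a that best explains b in the least-squares sense.
a_hat, *_ = np.linalg.lstsq(Phi, b, rcond=None)
rel_err = np.linalg.norm(a_hat - a_true) / np.linalg.norm(a_true)
print(f"relative error: {rel_err:.4f}")
```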

But this system has a problem: it is underdetermined, meaning there are more unknowns than equations. If the sensor has one million pixels but the scene to be recovered has ten million points, it is like trying to solve for 1,000 unknowns with only 100 equations, which in general has infinitely many solutions.
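The ambiguity can be seen directly in a scaled-down version of the same 10:1 ratio (here 10 equations and 100 unknowns, with a random stand-in Φ). Any vector in the null space of Φ can be added to a solution without changing b, so the measurement alone cannot single out the true scene.

```python
import numpy as np

rng = np.random.default_rng(3)
m, n = 10, 100                      # toy version of the ratio: 10 equations, 100 unknowns
Phi = rng.normal(size=(m, n))       # stand-in system matrix
a_true = rng.random(n)
b = Phi @ a_true

# Minimum-norm solution: one of infinitely many that fit the data exactly.
a_min = np.linalg.pinv(Phi) @ b

# Rows m..n-1 of Vt span the null space of Phi; adding one changes nothing in b.
_, _, Vt = np.linalg.svd(Phi)
null_vec = Vt[-1]
a_other = a_min + 5.0 * null_vec

print(np.allclose(Phi @ a_min, b), np.allclose(Phi @ a_other, b))  # both fit the data
print(np.linalg.norm(a_min - a_true))  # yet neither has to be the true scene
```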

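The way out is to exploit a property of natural images: sparsity. Most regions of a natural image vary smoothly and the rich information is concentrated at edges, so when the image is described by its changes (for example, the differences between neighboring pixels), most values are close to zero. Compressed sensing uses this prior: among all scenes consistent with the measurement, it looks for the sparsest one, which makes reconstruction from incomplete data possible.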
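One standard way to favor sparse solutions is to minimize the data misfit plus an l1 penalty, 0.5·||b - Φa||² + λ·||a||₁, using iterative shrinkage-thresholding (ISTA). The sketch below uses ISTA as a generic compressed-sensing solver; it is not claimed to be the algorithm of any particular lensless camera. To stay short it assumes a scene that is sparse in itself (a few bright points on a dark background) rather than sparse in its gradients, and the sizes, λ, and iteration count are illustrative choices.

```python
import numpy as np

def ista(Phi, b, lam, n_iter=10000):
    """Iterative shrinkage-thresholding for min_a 0.5*||b - Phi a||^2 + lam*||a||_1."""
    step = 1.0 / np.linalg.norm(Phi, 2) ** 2   # 1 / Lipschitz constant of the gradient
    a = np.zeros(Phi.shape[1])
    for _ in range(n_iter):
        grad = Phi.T @ (Phi @ a - b)           # gradient of the data-fit term
        z = a - step * grad                    # plain gradient step
        a = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft threshold
    return a

rng = np.random.default_rng(4)
m, n, k = 50, 100, 5     # 50 measurements, 100 unknowns, only 5 non-zero
Phi = rng.normal(size=(m, n)) / np.sqrt(m)
a_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
a_true[support] = rng.uniform(1.0, 2.0, size=k) * rng.choice([-1.0, 1.0], size=k)
b = Phi @ a_true

a_sparse = ista(Phi, b, lam=0.02)
a_min_norm = np.linalg.pinv(Phi) @ b   # fits b exactly but ignores sparsity

def rel_err(a):
    return np.linalg.norm(a - a_true) / np.linalg.norm(a_true)

print(f"sparse (ISTA): {rel_err(a_sparse):.3f}")
print(f"minimum norm:  {rel_err(a_min_norm):.3f}")
```

On the same underdetermined data, the minimum-norm solution typically spreads its energy over all 100 unknowns, while the l1-regularized estimate concentrates on the few true points. The thousands of iterations ISTA needs also hint at why traditional iterative reconstruction is slow.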

However, traditional iterative algorithms can take minutes to reconstruct a single image, which is too slow for many practical applications. Deep learning addresses this: a neural network is trained on thousands of pairs of coded measurements and clear images to learn the mapping between them. Once trained, the network can complete a reconstruction in milliseconds, enabling real-time imaging.
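Real systems use deep neural networks, but the "train once, reconstruct fast" idea can be shown with the simplest possible learned decoder: a single linear layer fitted by least squares to simulated (measurement, scene) pairs. This is a deliberately linear stand-in, not a neural network; the sizes, noise level, training-set size, and the helper `capture` are hypothetical. After fitting, reconstructing a new capture costs only one matrix multiplication.

```python
import numpy as np

rng = np.random.default_rng(5)
m, n = 96, 64                                 # hypothetical sizes
Phi = rng.normal(size=(m, n)) / np.sqrt(m)    # the "camera"; the learner never sees it

def capture(scenes, noise=0.01):
    """Simulate coded measurements b = Phi a + e for a batch of scenes (one per row)."""
    return scenes @ Phi.T + noise * rng.normal(size=(scenes.shape[0], m))

# Training set: pairs of (coded measurement, clear scene).
train_scenes = rng.random((2000, n))
train_meas = capture(train_scenes)

# "Training": fit a linear decoder W so that train_meas @ W approximates train_scenes.
W, *_ = np.linalg.lstsq(train_meas, train_scenes, rcond=None)

# "Inference": reconstructing an unseen capture is a single matrix product.
test_scene = rng.random((1, n))
recon = capture(test_scene) @ W
rel_err = np.linalg.norm(recon - test_scene) / np.linalg.norm(test_scene)
print(f"relative error on unseen scene: {rel_err:.3f}")
```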

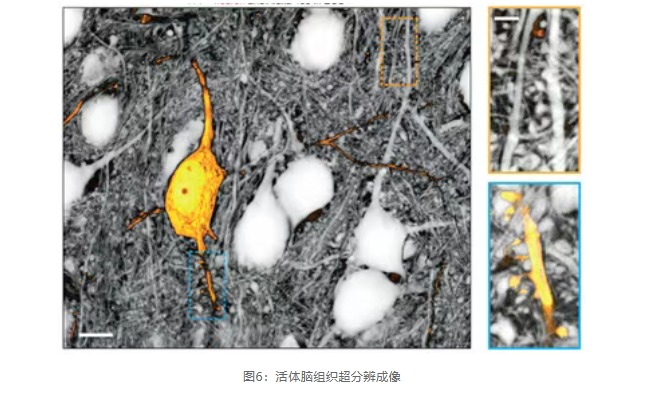

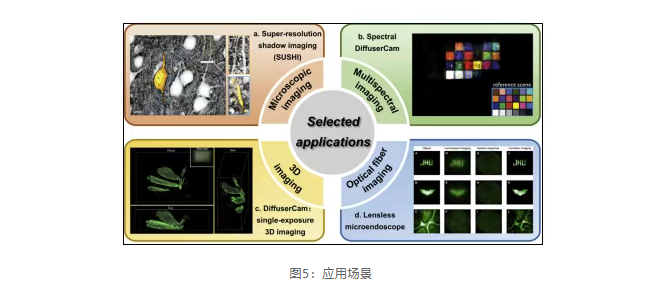

Lensless imaging has begun to move out of the laboratory and to demonstrate its unique value in several fields. In microscopy, lensless computational imaging offers a large field of view, high resolution, and portability, and has been widely applied to disease screening, environmental monitoring, and dynamic observation of cells.

Lensless microscopy based on super-resolution algorithms has even broken the diffraction limit, enabling observation of ultra-fine structures in the brain's neural networks. This shows that lensless imaging has found its own niche: it opens new possibilities within the "impossible triangle" of traditional optics, namely extremely small size, multidimensional information acquisition, and high resolution over a large field of view.